My party has just run through their first combat in Authentic D&D, and considering it was their first, it went well. Over the holiday I had some time to think about that combat, and about whether I could use algorithms to offload some of the work of running NPCs, particularly dumb ones with simple motivations. Whether or not that pans out, exploring the project should be enlightening in itself.

I'll make the code available here.

Another advantage of a system like this is that we can capture a combat in a repeatable (and version-controllable) way, at least to a degree. The beauty of D&D is that no rule system ever fully captures the range of human ingenuity, so the rules must always be updated fluidly in response to feedback from actual play.

At its simplest level, combat action breaks down into three kinds:

- move somewhere
- attack something
- interact with something

These actions can be done more than once per turn and in any order, depending on the available action points (AP) and circumstances. The goal is to break the enemy's will, through either death or morale collapse.
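To make "more than once, in any order" concrete, here is a minimal sketch that enumerates every ordered sequence of actions fitting within a turn's AP budget. The per-action costs in `ACTION_COSTS` are placeholders made up for illustration, not the actual Authentic D&D values, and `turn_plans` is a hypothetical name. A real planner would also need parameters for each action (where to move, what to attack).

```python
# Placeholder AP costs for illustration; NOT the actual Authentic D&D values.
ACTION_COSTS = {"move": 1, "attack": 1, "interact": 1}

def turn_plans(ap, costs=ACTION_COSTS):
    """Return every ordered sequence of actions whose total cost fits in `ap`.

    Actions may repeat and appear in any order; the empty plan (do nothing)
    is included.
    """
    plans = []

    def extend(plan, remaining):
        plans.append(tuple(plan))
        for action, cost in costs.items():
            if cost <= remaining:
                plan.append(action)
                extend(plan, remaining - cost)
                plan.pop()  # backtrack and try the next action

    extend([], ap)
    return plans
```

Even with these toy costs, 3 AP already yields 40 plans, which is one reason to score options rather than search them blindly.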

Every action taken by an active participant in the combat should serve this goal. Participants who are more intelligent or better trained will select better options than others. [We can also invert this selection function to simulate the actions of PCs, who will choose the worst option in any possible circumstance.]
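One simple way to model "smarter participants pick better options" is a softmax choice whose sharpness grows with skill. This is a swapped-in technique for illustration, not necessarily how the selection will end up working; `choose_option` and its `skill` parameter are hypothetical. Flipping the sign of the scores gives the PC mode from the aside above.

```python
import math
import random

def choose_option(options, score, skill, rng=random, invert=False):
    """Pick one option, favouring higher scores more strongly as `skill` rises.

    Assumed model: softmax with temperature 1/skill (skill >= 0).
    skill = 0 -> uniform random choice; large skill -> almost always the best.
    invert=True negates the scores (the tongue-in-cheek PC mode).
    """
    sign = -1.0 if invert else 1.0
    scores = [sign * score(o) for o in options]
    best = max(scores)
    # Subtract the max before exponentiating to avoid overflow.
    weights = [math.exp((s - best) * skill) for s in scores]
    return rng.choices(options, weights=weights, k=1)[0]
```

A dim goblin might use a small `skill`, so it often wanders onto a mediocre hex, while a veteran with a large `skill` nearly always takes the top-scoring one.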

I'll start with movement, which I thought would be the easiest of the three actions. That it turned out to be anything but does not bode well for the others.

Let's consider a battlemap with no obstacles and two actors. We'll play with the actor on the right, giving it 3 AP and the ability to move according to the Authentic D&D rules. Without considering other constraints, this means that for each AP, the actor can move between 1 and 8 hexes in a straight line. This generates the following possibility space:
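The unconstrained space can be reproduced by counting paths hex by hex. This sketch assumes axial hex coordinates and treats each AP as an independent straight move of 1 to 8 hexes in any of the six directions; `unconstrained_paths` is a hypothetical name.

```python
from collections import Counter

# Axial hex directions (q, r); consecutive entries are 60 degrees apart.
HEX_DIRECTIONS = [(1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]

def unconstrained_paths(start, ap, max_stride=8):
    """Count the ways to end on each hex after spending exactly `ap` AP,
    where each AP moves 1..max_stride hexes straight in any of 6 directions."""
    counts = Counter({start: 1})
    for _ in range(ap):
        nxt = Counter()
        for (q, r), n in counts.items():
            for dq, dr in HEX_DIRECTIONS:
                for d in range(1, max_stride + 1):
                    nxt[(q + dq * d, r + dr * d)] += n
        counts = nxt
    return counts
```

The resulting counts are what the shading encodes: hexes with more ways to reach them come out darker.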

|

| Movement possibilities: darker hexes mean more possible paths to reach that hex |

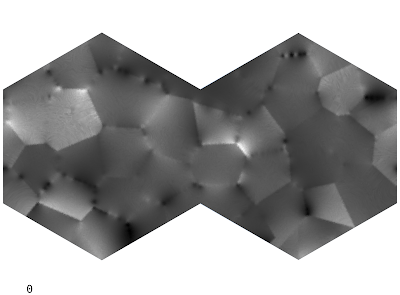

However, there are several constraints we must add, which proved non-trivial to program. The actor can only change speed once, can only rotate 60$^\circ$ left or right at the end of each AP, and can't move through obstacles (here, the only obstacle is the other actor). That reduces our space to the following:
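A sketch of the constrained search, tracking each state as (position, facing, stride, whether the speed change has been used). Several rule readings here are assumptions rather than the Authentic D&D text: the first stride is chosen freely and doesn't count as the one speed change, the change can happen at the start of any later AP, a turn of 0 or ±60° happens between APs (turning after the final AP doesn't move the actor, so it is left out of the path counts), and a move is blocked if any hex along the line is occupied. `constrained_paths` is a hypothetical name.

```python
from collections import Counter

# Axial hex directions; consecutive entries are 60 degrees apart.
HEX_DIRECTIONS = [(1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]

def constrained_paths(start, ap, blocked=frozenset(), facings=range(6), max_stride=8):
    """Count paths to each hex after exactly `ap` AP under the (assumed) rules:
    one speed change, a 60-degree turn between APs, no moving through `blocked`."""
    # State: (position, facing index, stride, speed change already used?)
    states = Counter()
    for f in facings:
        for s in range(1, max_stride + 1):
            states[(start, f, s, False)] += 1  # initial stride is a free choice

    for step in range(ap):
        nxt = Counter()
        for (pos, facing, stride, changed), n in states.items():
            # Turning happens at the end of an AP, i.e. before every AP but the first.
            turns = (0,) if step == 0 else (-1, 0, 1)
            # Keep the current stride, or spend the single allowed speed change.
            options = [(stride, changed)]
            if step > 0 and not changed:
                options += [(s, True) for s in range(1, max_stride + 1) if s != stride]
            for turn in turns:
                f = (facing + turn) % 6
                dq, dr = HEX_DIRECTIONS[f]
                for s, used in options:
                    line = [(pos[0] + dq * i, pos[1] + dr * i) for i in range(1, s + 1)]
                    if any(h in blocked for h in line):
                        continue  # can't pass through an obstacle
                    nxt[(line[-1], f, s, used)] += n
        states = nxt

    # Collapse states down to end positions.
    ends = Counter()
    for (pos, _facing, _stride, _used), n in states.items():
        ends[pos] += n
    return ends
```

By default all six starting facings are allowed; passing `facings=[f]` restricts the search to an actor that starts facing direction `f`.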

[Figure: Possibility space with constraints added]

The space is still mostly symmetrical, but we can also see the disruption caused by the presence of another actor. Keep in mind also that this is the possibility space for exactly 3 AP; in many cases our actor would only want to spend one or two AP on movement, or might even want to plan several turns ahead.

[Figure: Movement in 1 AP; darker hexes indicate a higher stride]

For example, if the actor on the right wants to attack the actor on the left, it can spend 1 AP moving 2 hexes at stride 2 to enter melee, leaving 2 AP to attack. In this way, we can build up a desirability score for each possible hex and choose an optimal or near-optimal combination of actions from it.
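As an illustration of the desirability idea, here is a toy scoring function over (hex, AP spent) pairs, such as a movement search might produce. The weights (a flat bonus of 10 for ending in melee, a small tiebreak for saved AP) are arbitrary assumptions, and `desirability` and `best_move` are hypothetical names. Hex distance uses the standard axial-coordinate formula.

```python
def hex_distance(a, b):
    """Distance between two hexes in axial coordinates (q, r)."""
    dq, dr = a[0] - b[0], a[1] - b[1]
    return (abs(dq) + abs(dr) + abs(dq + dr)) // 2

def desirability(hex_, ap_spent, target, total_ap=3):
    """Hypothetical score for ending a move on `hex_` after spending `ap_spent` AP."""
    remaining = total_ap - ap_spent
    if hex_distance(hex_, target) == 1:
        # In melee: every AP left over can go towards attacking.
        return 10 + remaining
    # Otherwise prefer getting closer, with leftover AP as a small tiebreak.
    return -hex_distance(hex_, target) + 0.1 * remaining

def best_move(options, target, total_ap=3):
    """options: iterable of (hex, ap_spent) pairs from the movement search."""
    return max(options, key=lambda o: desirability(o[0], o[1], target, total_ap))
```

For instance, with the target at (0, 0), the options `((1, 0), 1)`, `((2, 0), 1)` and `((1, 0), 2)` score 12, about -1.8 and 11, so `best_move` picks reaching melee in 1 AP, like the stride-2 move in the example above.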

I'm not quite done with movement yet. Right now, the only obstacles we avoid are other actors. I'll need to do some thinking on how to add simple static obstacles such as walls or buildings.